Polygons Vs. Triangles

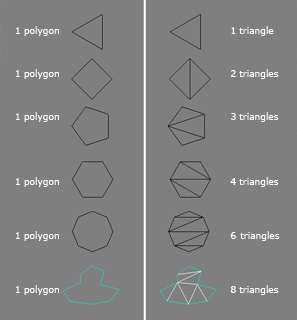

When a game artist talks about the poly count of a model, they really mean the triangle count. Games use triangles not polygons because most modern graphic hardware is built to accelerate the rendering of triangles.

The polygon count that's reported in a modeling app is always misleading, because the triangle count is higher. Polygons are always converted into triangles when loaded in a game engine. If you're using a polygon counting tool in your modeling app, it's best to switch it to count triangles so you're using the same counting method everyone else is using.

|

| Image by Michael "cryrid" Taylor |

When a model is exported to a game engine, the polygons are all converted into triangles automatically. However different tools will create different triangle layouts within those polygons. A quad can end up either as a "ridge" or as a "valley" depending on how it's triangulated. Artists need to carefully examine a new model in the game engine to see if the triangle edges are turned the way they wish. If not, specific polygons can then be triangulated manually.

|

| Image by Eric Chadwick |

When using a Normal Map some tools may require an artist to convert the model into all triangles before baking. If the triangles are flipped differently when the model is exported than they were when the normal map was baked, this can cause the final normal-mapped lighting to zig-zag across the model. Triangulating before baking will solve this.

Polygons have a useful purpose for game artists. A model made of mostly four-sided polygons (quads) will work well with edge-loop selection & transform methods that speed up modeling. This makes it easier to judge the "flow" of a model, and to weight a skinned model to its bones. Artists try to preserve these polygons in their models as long as possible.

Triangle Count vs. Vertex Count

Vertex count is ultimately more important for performance and memory than the triangle count, but for historical reasons artists more commonly use triangle count as a performance measurement.

On the most basic level, the triangle count and the vertex count can be similar if the all the triangles are connected to one another. 1 triangle uses 3 vertices, 2 triangles use 4 vertices, 3 triangles use 5 vertices, 4 triangles use 6 vertices and so on.

However, seams in UVs, changes to shading/smoothing groups, and material changes from triangle to triangle... are all treated as a physical break in the model's surface, when the model is rendered by the game. The vertices must be duplicated at these breaks, so the model can be sent in renderable chunks to the graphics card.

Overuse of smoothing groups, over-splittage of UVs, too many material assignments (and too much misalignment of these three properties), all of these lead to a much larger vertex count. This can stress the transform stages for the model, slowing performance. It can also increase the memory cost for the mesh because there are more vertices to send and store.

Rendering:

Rendering is the final process of creating the actual 2D image or animation from the prepared scene. This can be compared to taking a photo or filming the scene after the setup is finished in real life. Several different, and often specialized, rendering methods have been developed. These range from the distinctly non-realistic wireframe rendering through polygon-based rendering, to more advanced techniques such as: scanline rendering, ray tracing, or radiosity. Rendering may take from fractions of a second to days for a single image/frame. In general, different methods are better suited for either photo-realistic rendering, or real-time rendering.

Real-time

Rendering for interactive media, such as games and simulations, is calculated and displayed in real time, at rates of approximately 20 to 120 frames per second. In real-time rendering, the goal is to show as much information as possible as the eye can process in a fraction of a second, i.e. one frame. The primary goal is to achieve an as high as possible degree of photorealism at an acceptable minimum rendering speed (usually 24 frames per second, as that is the minimum the human eye needs to see to successfully create the illusion of movement). In fact, exploitations can be applied in the way the eye 'perceives' the world, and as a result the final image presented is not necessarily that of the real-world, but one close enough for the human eye to tolerate. Rendering software may simulate such visual effects as lens flares, depth of field or motion blur. These are attempts to simulate visual phenomena resulting from the optical characteristics of cameras and of the human eye. These effects can lend an element of realism to a scene, even if the effect is merely a simulated artefact of a camera. This is the basic method employed in games, interactive worlds and VRML. The rapid increase in computer processing power has allowed a progressively higher degree of realism even for real-time rendering, including techniques such as HDR rendering. Real-time rendering is often polygonal and aided by the computer's GPU.

Non Real-time

Animations for non-interactive media, such as feature films and video, are rendered much more slowly. Non-real time rendering enables the leveraging of limited processing power in order to obtain higher image quality. Rendering times for individual frames may vary from a few seconds to several days for complex scenes. Rendered frames are stored on a hard disk then can be transferred to other media such as motion picture film or optical disk. These frames are then displayed sequentially at high frame rates, typically 24, 25, or 30 frames per second, to achieve the illusion of movement.

When the goal is photo-realism, techniques such as ray tracing or radiosity are employed. This is the basic method employed in digital media and artistic works. Techniques have been developed for the purpose of simulating other naturally-occurring effects, such as the interaction of light with various forms of matter. Examples of such techniques include particle systems (which can simulate rain, smoke, or fire), volumetric sampling (to simulate fog, dust and other spatial atmospheric effects), caustics (to simulate light focusing by uneven light-refracting surfaces, such as the light ripples seen on the bottom of a swimming pool), and subsurface scattering (to simulate light reflecting inside the volumes of solid objects such as human skin).

The rendering process is computationally expensive, given the complex variety of physical processes being simulated. Computer processing power has increased rapidly over the years, allowing for a progressively higher degree of realistic rendering. Film studios that produce computer-generated animations typically make use of a render farm to generate images in a timely manner. However, falling hardware costs mean that it is entirely possible to create small amounts of 3D animation on a home computer system. The output of the renderer is often used as only one small part of a completed motion-picture scene. Many layers of material may be rendered separately and integrated into the final shot using compositing software.

Reflection/Scattering - How light interacts with the surface at a given point

Shading - How material properties vary across the surface